When I got into the investment business well over 40 years ago, nobody had figured out a way to actually measure the risk of an investment. But several people, notably some professors at well-known business schools, were working on the idea that risk could be treated as the variability of periodic returns. Even better, if these academics could show that investment returns were “normally distributed,” i.e., if the periodic returns (daily, weekly, monthly or annual) could be charted by how often each level of return occurred and the result looked something like a bell-shaped curve, then risk (variability of return) could be measured accurately. As it turned out, investment returns looked almost like a bell-shaped curve, except that there were more periods of extremely high or low returns than a true bell curve would predict. But the results were close enough for these mathematicians to believe they could measure risk by taking the long-term average (mean) return, comparing it with each individual periodic return, and computing the average of the squared differences (the variance). This became known as mean-variance analysis (or what might be called return/risk analysis).
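To make the arithmetic concrete, here is a minimal sketch of the mean and variance calculation in Python. The five annual returns are made-up numbers chosen for illustration, not historical data, and the variable names are my own.

```python
import statistics

# Hypothetical annual returns in percent (illustrative only, not real data).
annual_returns = [12.0, -8.0, 25.0, 4.0, 17.0]

# Long-term average (mean) return.
mean_return = statistics.mean(annual_returns)

# Variance: the average of the squared differences from the mean.
variance = statistics.pvariance(annual_returns)

# Standard deviation: the square root of the variance, back in percent units.
std_dev = statistics.pstdev(annual_returns)

print(f"mean = {mean_return:.1f}%")
print(f"variance = {variance:.1f}")
print(f"standard deviation = {std_dev:.1f}%")
```

The standard deviation is usually the number quoted as "risk" because, unlike the variance, it is expressed in the same units as the returns themselves.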

Standard deviation, the square root of the variance, is a fancy statistical term for how far returns typically stray from the mean. For a bell-shaped distribution, returns fall within one standard deviation of the mean about 68% of the time; the other 32% of the time they fall farther away. For example, if common stocks have a mean (average) long-term annualized return of 10% and a standard deviation of 15%, then about 68% of the time the annual return for stocks will be between -5% and +25% (10% plus or minus 15%). The rest of the time the annual return will be greater than 25% or less than -5%. Bonds might have a mean annual return of 5% and a standard deviation of 5%, implying that about 68% of the time the annual return of bonds will fall between 0% and 10%. Under this definition of risk, even supposedly risk-free T-bills have risk. The average return of T-bills might be 3% per year, but in any given year the return may be more or less than 3%.

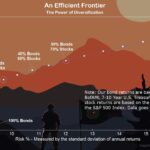
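The 68% figure can be checked with a short Python sketch. It assumes returns follow an exact bell curve (which, as noted earlier, real returns only approximate), and the helper name `one_sigma_range` is mine, introduced only for illustration.

```python
from statistics import NormalDist

def one_sigma_range(mean, std_dev):
    """Return the (low, high) band one standard deviation around the mean."""
    return (mean - std_dev, mean + std_dev)

# Stocks from the example: mean 10%, standard deviation 15%.
stock_low, stock_high = one_sigma_range(10, 15)

# Bonds from the example: mean 5%, standard deviation 5%.
bond_low, bond_high = one_sigma_range(5, 5)

# Probability that a normally distributed stock return lands inside the band.
stocks = NormalDist(mu=10, sigma=15)
prob_inside = stocks.cdf(stock_high) - stocks.cdf(stock_low)

print(f"stocks: {stock_low}% to {stock_high}%")
print(f"bonds: {bond_low}% to {bond_high}%")
print(f"chance of landing inside the stock band: {prob_inside:.1%}")
```

The probability comes out the same for any mean and standard deviation, which is why the one-standard-deviation band works as a common yardstick across asset classes.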

The fascinating thing is, when you compare the average return of all individual classes of securities (including real estate, gold, foreign currency, etc.) to their respective risks (variability of returns), they are roughly proportional. That is, the greater the return, the greater the risk. So, there is no magic to the investment business. The more risk you take, the greater the expected return, BUT not necessarily the greater the actual return. If a person makes an investment that historically (say, based on 40 years of data) has provided a return of 10% per year (like common stocks), this does not mean that the return will be 10% per year over the next 2, 5, or 10 years. That’s where variability comes in. Fortunately, if each year’s return is roughly independent of the last, the standard deviation of the total return grows only with the square root of time. So a class of securities held for 4 years will have only twice (not 4 times) the total-return standard deviation (risk) of a 1-year holding period, and the standard deviation of the average annual return shrinks by the same factor. If stocks have an annual standard deviation of 15%, but someone holds a stock portfolio for 40 years, then the standard deviation of the annualized return (risk) will turn out to be 15% divided by the square root of 40, or only about 2.4%!
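The square-root-of-time arithmetic can be sketched in a few lines of Python. This assumes each year's return is independent of the others and has the same one-year standard deviation, a simplification that real markets only approximate. The function names are mine.

```python
import math

def total_return_std_dev(one_year_std_dev, years):
    """Spread of the total return over the whole period: grows with sqrt(years)."""
    return one_year_std_dev * math.sqrt(years)

def annualized_std_dev(one_year_std_dev, years):
    """Spread of the average annual return: shrinks with sqrt(years)."""
    return one_year_std_dev / math.sqrt(years)

one_year_risk = 15.0  # percent, the stock figure used in the text

for years in (1, 4, 40):
    total = total_return_std_dev(one_year_risk, years)
    per_year = annualized_std_dev(one_year_risk, years)
    print(f"{years:>2} years: total {total:.1f}%, annualized {per_year:.1f}%")
```

For the 40-year holding period the annualized figure works out to about 2.4%, matching the 15% divided by the square root of 40 in the text.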

This is why an investor’s portfolio should always be allocated between high-risk and low-risk securities according to their time-horizon (expected investing period).